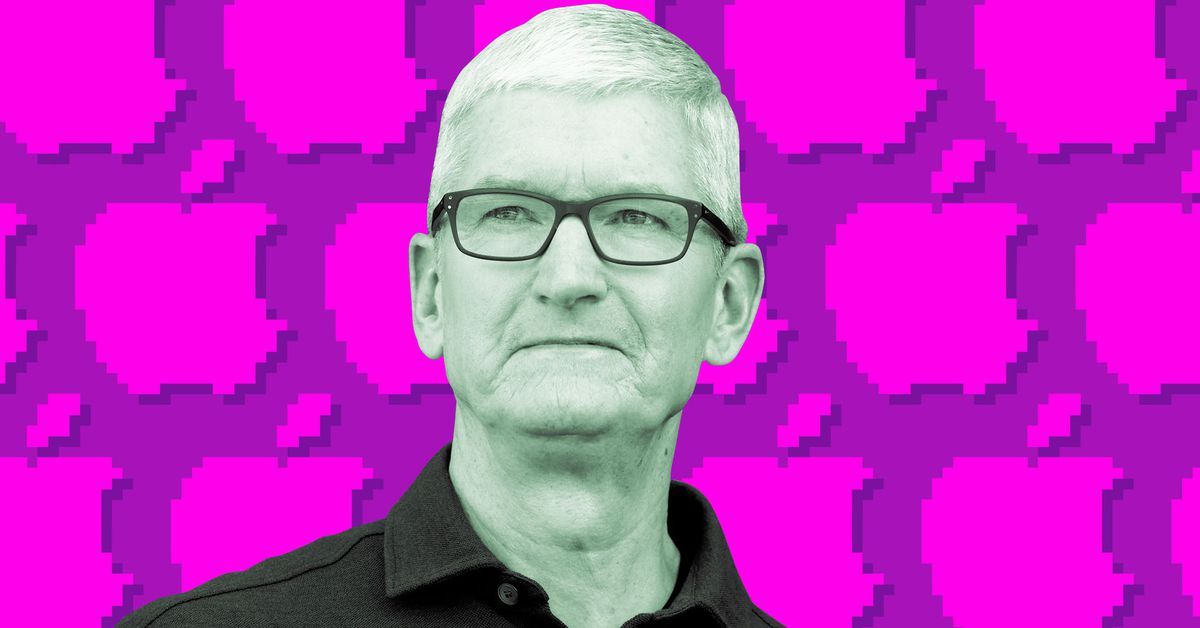

Apple CEO Tim Cook has acknowledged that the company’s new Apple Intelligence system, which brings AI features to iPhones, iPads, and Macs, may not be 100% accurate and could generate false or misleading information, known as AI hallucinations. Although confident in the system’s quality, Cook admitted that there is always some level of uncertainty, citing mistakes made by other AI systems such as Google’s Gemini-powered AI and ChatGPT. Apple is taking steps to mitigate these risks: its OpenAI partnership, which integrates ChatGPT into Siri, includes disclaimers prompting users to verify the accuracy of the information. Apple may also work with other AI companies, including Google, in the future.

Summarized by Llama 3 70B

I am 100% sure that no one can stop “AI hallucinations” because everything a language model outputs is just a hallucination that met some sort of confidence threshold. Confidence does not equal truth.

Dude, this most recent tech bro scramble is ridiculous. Google and Apple are fully aware that their stupid LLMs are built for verisimilitude, not accuracy, yet they’re shoehorning them into everything and just accepting that it’ll fuck with their users…