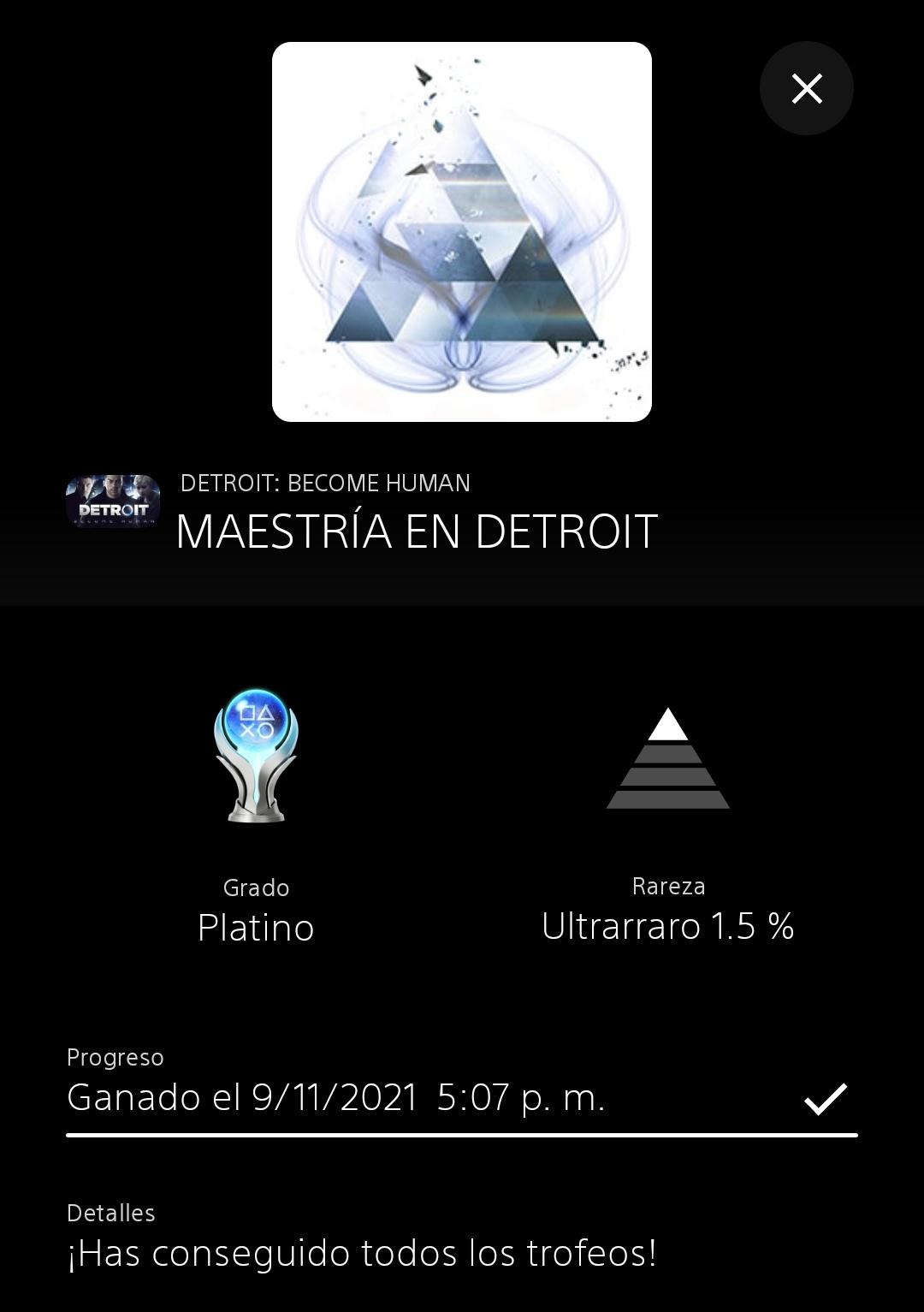

I think Detroit: Become Human was the first game I ever platinumed. I was really looking forward to playing it when it was announced, even more so knowing that it was being developed by the creators of Heavy Rain. In many ways, though, Detroit: Become Human seems even better to me.

One of the things I liked the most was the setting: a fictional Detroit going through a serious economic crisis due to the monopoly of CyberLife, the company that manufactures the androids. The city is full of interesting details, such as electronic magazines, which give you context about what is happening outside Detroit. The characters are very well written, and I became very invested in most of them (Hank, for example). The character modeling, subplots and use of narrative are also great.

One of the drawbacks is that in some outcomes, you are severely punished for resorting to violence (even if it’s justified). And trust me, when you play this, you will know what I’m talking about. These are the kind of decisions that leave you with a bad taste in your mouth if you make the “wrong” choice.

Despite this, it’s a great game IMO :)

Don’t Breathe (2016)