☆ Yσɠƚԋσʂ ☆

- 15.8K Posts

- 11.8K Comments

HasanAbi redemption arc

8 · 5 hours ago

Not to mention the fact that the US didn’t even manage to do a regime change in Venezuela in the end.

12 · 9 hours ago

That particular bit is very much on point. What they’re all really upset about is that the empire is turning on them. They were perfectly fine with the prior arrangement, and they’re all still hoping that things can go back to the way they were. The Europeans in particular are very obviously trying to wait Trump out. It seems like Carney is a bit more realistic about the state of things, and he’s trying to hedge.

17 · 13 hours ago

In fact, I would argue we’re seeing the reverse of the Cold War playing out right now. China is leading the bigger economic bloc, and it controls the vast majority of global manufacturing. Meanwhile, the US-led bloc is rapidly descending into chaos and is already starting to fight itself.

16 · 14 hours ago

I really can’t see how they’d even begin to catch up to China at this point. I expect that the whole piracy-on-the-high-seas thing is going to accelerate the transition massively.

I regret to inform you that the text is not the original. :)

Nothing to add; @CriticalResist8@lemmygrad.ml nailed it :)

5 · 1 day ago

It certainly lets you see the world a lot more clearly.

15 · 2 days ago

Looks like the AWS team tests in production. It’s also wild that they don’t even have a human in the loop to do any sort of review before the agent pushes to prod. It’s an absolute clown show over at Amazon.

3 · 2 days ago

In terms of flexibility, for sure, but I’m guessing they’re just going for raw optimisation here to see how fast they can make it go.

3 · 2 days ago

It depends on how the chip is designed. With something like an FPGA or memristors, you could reconfigure the chip itself to support different network topologies and weights. But even with a chip that’s not configurable, this is still pretty useful. If you can make a chip that runs a full DeepSeek model, it can do a ton of very useful tasks right now, even without any future upgrades. So it’s not like outdated chips will become useless. If you set one up for whatever task you need and it does the job, you just keep using it for that.

8 · 2 days ago

There was absolutely zero chance of India abandoning billions in trade because the US is mad. Also, the recent farmer protests likely ended up being a decisive factor. The Indian economy is heavily reliant on cheap energy from Russia now, and cutting that off would lead to even more unrest.

8 · 2 days ago

TPUs are general-purpose accelerators for any neural network. They still have to fetch instructions and manage a programmable memory hierarchy, because they have to provide a general-purpose platform for anything from a CNN to a Transformer.

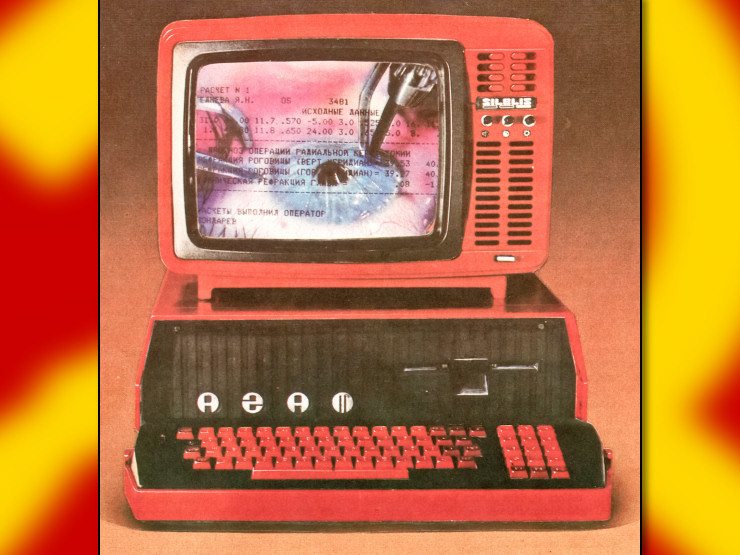

What Taalas is doing amounts to hard-wiring the actual weights and structure of a specific model directly into the silicon. They call these Hardcore Models because the model is expressed directly in the topology of the chip. Their approach eliminates the overhead of fetching instructions or moving data between a separate processor and memory. They bypass the memory wall that plagues GPUs and TPUs by merging storage and computation at the transistor level, and that’s why they see such a massive leap in tokens per second and power efficiency. The trade-off is that you give up the ability to run any model in exchange for running one specific model at near-instantaneous speeds and at a fraction of the cost.
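A loose software analogy for the difference between a general-purpose accelerator and a hard-wired model (this is only an illustration under my own assumptions, not how Taalas actually lays out its silicon). The general path reads weights out of a separate data structure on every call, so it can run any model of the right shape. The "hard-wired" path generates code once with every weight inlined as a constant: nothing is fetched, but it can only ever compute that one model. The names `general_matvec` and `hardwire` are hypothetical.

```python
from typing import Callable, List

Matrix = List[List[float]]
Vector = List[float]

def general_matvec(weights: Matrix, x: Vector) -> Vector:
    # General-purpose path: the weights live in a separate data structure and
    # are read on every call, so any model of the right shape can be run.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def hardwire(weights: Matrix) -> Callable[[Vector], Vector]:
    # "Hard-wired" path (analogy only): generate source code with every weight
    # inlined as a literal constant. The resulting function *is* this one
    # model; there is no weight storage left to fetch from or swap out.
    outputs = []
    for row in weights:
        terms = " + ".join(f"{w!r} * x[{j}]" for j, w in enumerate(row))
        outputs.append(terms or "0.0")
    src = "def fixed_model(x):\n    return [" + ", ".join(outputs) + "]\n"
    namespace: dict = {}
    exec(src, namespace)
    return namespace["fixed_model"]

if __name__ == "__main__":
    W = [[1.0, 2.0], [0.5, -1.0]]
    x = [3.0, 4.0]
    print(general_matvec(W, x))  # [11.0, -2.5]
    fixed = hardwire(W)
    print(fixed(x))              # [11.0, -2.5]
```

Both paths compute the same result; the inlined version simply has no separate weight store to read from and no way to swap in different weights, which mirrors the trade-off described in the comment.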

5 · 2 days ago

I think you’ll still need a human in the loop, because only a human can decide whether the code is doing what’s intended. The nature of the job is going to change dramatically, though. My prediction is that the focus will be on writing declarative specifications that act as a contract for the LLM. There are also types of features that are very difficult to specify and verify formally; anything dealing with side effects or external systems is a good example. We have good tools to formally prove data consistency using type systems and provers, but real-world applications have to deal with the outside world to do anything useful. So it’s most likely that the human will work at a higher level, focusing on what the application is doing in a semantic sense, while the agents handle the underlying implementation details.
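A minimal sketch of what a declarative spec acting as a contract might look like, assuming pure functions (no side effects, which is exactly the easy case the comment contrasts with external systems). The spec lists properties that must relate input to output, and a checker runs any candidate implementation, whether human- or LLM-written, against random inputs. `Spec`, `dedupe_sorted_spec`, and both implementations are hypothetical, and random testing like this is a property-based approach, not the formal proof the comment mentions.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Property = Callable[[List[int], List[int]], bool]

@dataclass
class Spec:
    # A declarative contract: named properties relating an input to an output.
    # It states *what* must hold, not *how* to compute it.
    properties: Dict[str, Property] = field(default_factory=dict)

    def check(self, impl: Callable[[List[int]], List[int]],
              trials: int = 200, seed: int = 0) -> List[str]:
        # Run the candidate on random inputs and return the names of any
        # violated properties. An empty list means no violation was found,
        # which is evidence, not a proof, that the contract holds.
        rng = random.Random(seed)
        failures = set()
        for _ in range(trials):
            xs = [rng.randint(-5, 5) for _ in range(rng.randint(0, 10))]
            out = impl(list(xs))  # pass a copy so impl can't mutate xs
            for name, prop in self.properties.items():
                if not prop(xs, out):
                    failures.add(name)
        return sorted(failures)

# Hypothetical contract: "return the distinct input values in ascending order".
dedupe_sorted_spec = Spec({
    "strictly_ascending": lambda xs, out: all(a < b for a, b in zip(out, out[1:])),
    "same_values": lambda xs, out: set(out) == set(xs),
})

def good_impl(xs: List[int]) -> List[int]:
    return sorted(set(xs))

def buggy_impl(xs: List[int]) -> List[int]:
    return sorted(xs)  # keeps duplicates, so strict ordering fails

if __name__ == "__main__":
    print(dedupe_sorted_spec.check(good_impl))   # []
    print(dedupe_sorted_spec.check(buggy_impl))  # ['strictly_ascending']
```

The human's job in this picture is writing and reviewing the properties; the implementation behind `impl` can be regenerated freely as long as it keeps passing them.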

4 · 2 days ago

I’ve been using this pattern in some large production projects, and it’s been a real lifesaver for me. Like you said, once the code gets large, it’s just too hard to keep track of everything, because it overflows what you can effectively keep in your head. At that point you just start guessing when you make decisions, which inevitably leads to weird bugs. The other huge benefit is that it makes it far easier to deal with changing requirements. If you have a graph of the steps you’re doing, it’s trivial to add, remove, or rearrange steps. You can visually inspect it and guarantee that the new workflow is doing what you want it to.
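The comment doesn’t spell out the pattern beyond “a graph of steps”, so here is a minimal sketch under that assumption: each step is a named function over a shared context, dependencies are declared as plain data, and Python’s standard `graphlib.TopologicalSorter` (Python 3.9+) works out the run order. The step names and the `to_dot` helper are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List, Set

Context = Dict[str, Any]
Step = Callable[[Context], None]

def run_workflow(steps: Dict[str, Step], deps: Dict[str, Set[str]], ctx: Context) -> List[str]:
    # Map each step to the steps it depends on; steps with no entry in deps
    # are treated as having no prerequisites.
    graph = {name: deps.get(name, set()) for name in steps}
    # static_order() yields every node after all of its predecessors and
    # raises graphlib.CycleError if the dependencies form a cycle.
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        steps[name](ctx)
    return order

def to_dot(deps: Dict[str, Set[str]]) -> str:
    # Render the dependency graph as Graphviz DOT for visual inspection.
    edges = "".join(
        f'  "{dep}" -> "{name}";\n'
        for name in sorted(deps)
        for dep in sorted(deps[name])
    )
    return "digraph workflow {\n" + edges + "}\n"

# Hypothetical example steps operating on a shared context dict.
def load(ctx: Context) -> None:
    ctx["rows"] = [3, 1, 2]

def validate(ctx: Context) -> None:
    if not all(isinstance(r, int) for r in ctx["rows"]):
        raise ValueError("rows must be integers")

def transform(ctx: Context) -> None:
    ctx["rows"] = sorted(ctx["rows"])

def save(ctx: Context) -> None:
    ctx["saved"] = list(ctx["rows"])

steps = {"load": load, "validate": validate, "transform": transform, "save": save}
deps = {
    "load": set(),
    "validate": {"load"},
    "transform": {"validate"},
    "save": {"transform"},
}

if __name__ == "__main__":
    ctx: Context = {}
    print(run_workflow(steps, deps, ctx))  # ['load', 'validate', 'transform', 'save']
    print(ctx["saved"])                    # [1, 2, 3]
    print(to_dot(deps))
```

Adding a step is then a new function plus one entry in `steps` and `deps` (for example an `audit` step with `deps["audit"] = {"save"}`), and `to_dot` emits Graphviz DOT so the workflow can be rendered and checked visually.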

8 · 2 days ago

I suspect we’ll have to wait a couple of years for production capacity to expand.

4 · 2 days ago

I’d argue that’s largely where we are already. Most people are using cloud services for practically everything.

I’m guessing they implemented it as a proof of concept because it’s a well-known model with a simple architecture. I’m really looking forward to full-blown 600B+ parameter chips. That’s where shit gets real. And just imagine this stuff applied to robotics. Those Unitree robots with a DeepSeek chip would basically be Star Wars droids.