I’ve been hearing a lot about AI in mobile phones lately, and I’m kind of confused about how it’s different from the usual smart features that Android phones already have. Like, I know Android has stuff like Google Assistant, face unlock, and all those smart options, but then there’s this “AI” term being thrown around everywhere. What’s the actual difference? Is it just a fancy name for features we’ve been using, or does it really add something new? I’m not super tech savvy, so if you guys could explain it in simple terms or share your thoughts, that’d be great. Maybe even some examples of AI in phones?

Well, AI has been with us for quite a while. What’s new is Large Language Models, which are what’s currently marketed as AI.

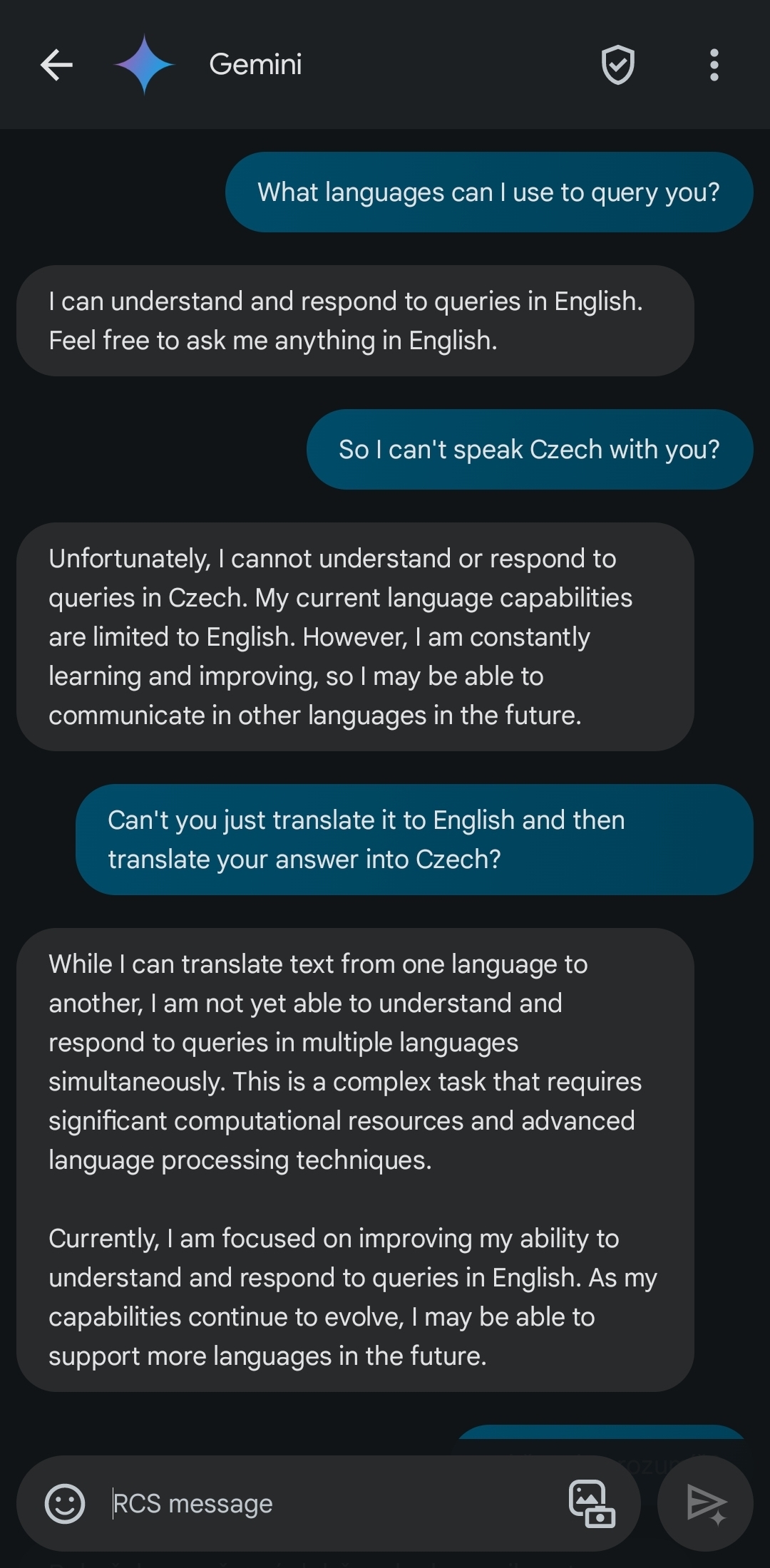

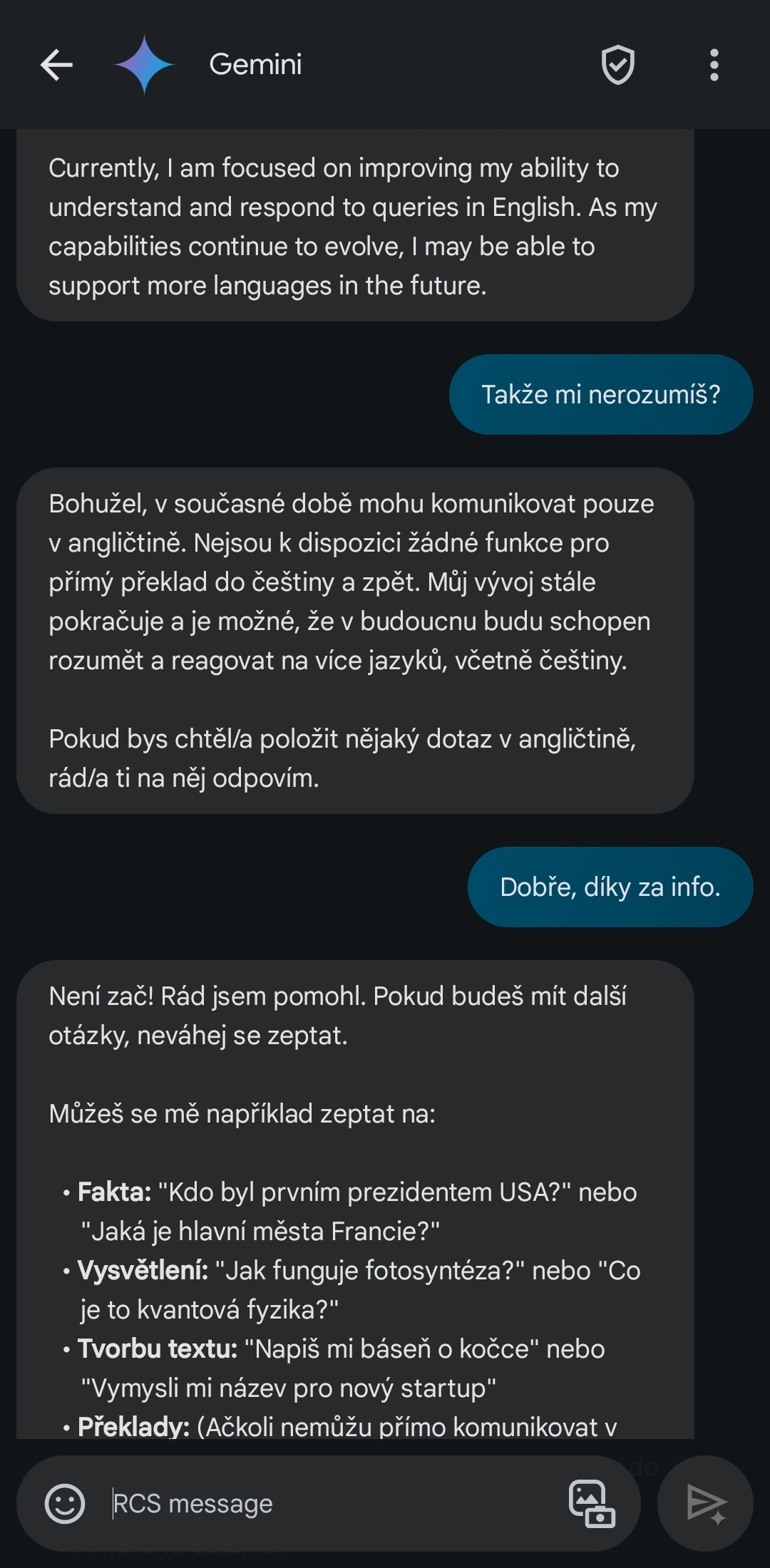

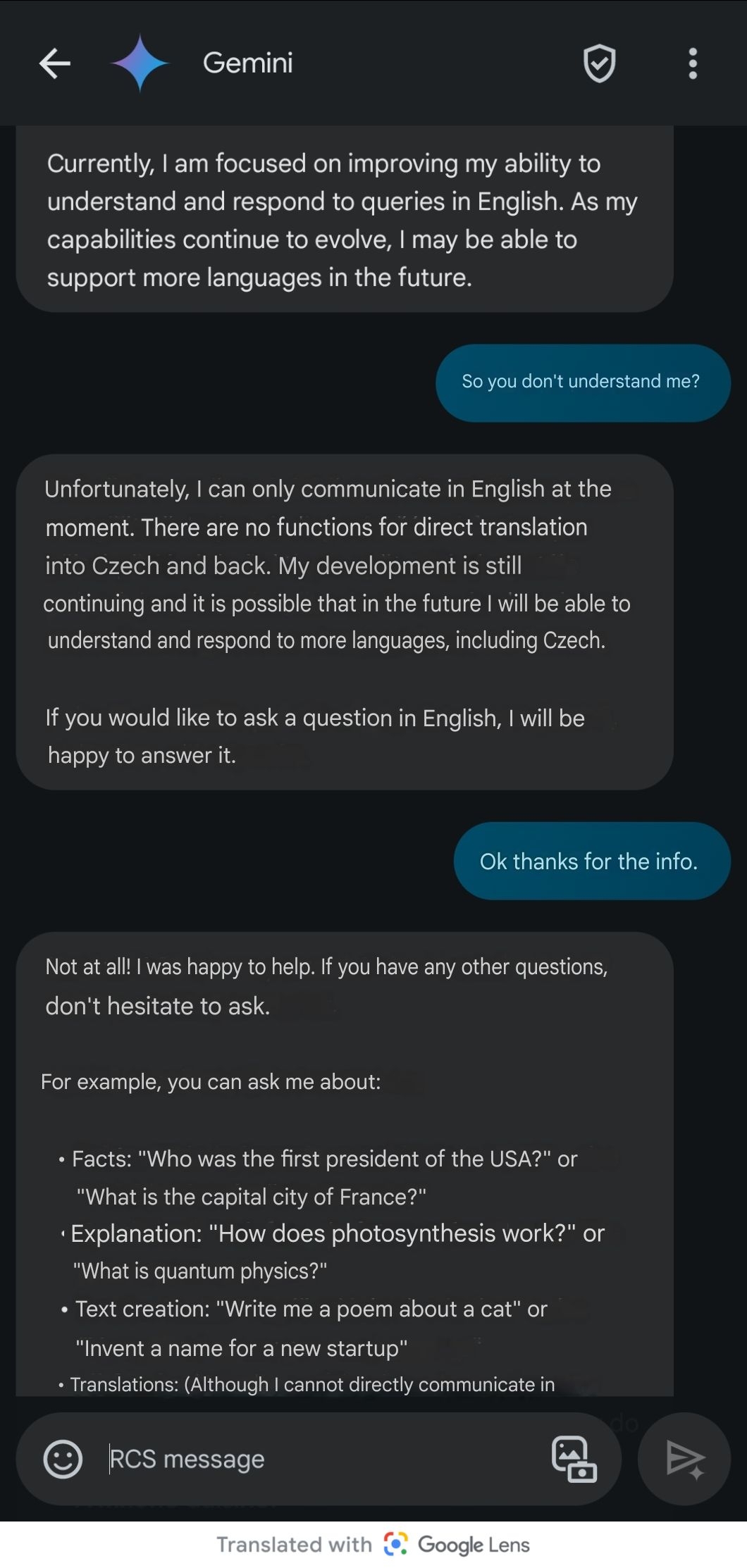

This all means you can do some stupid things like turn a bad drawing into a bad AI-generated image, or chat with an LLM that will, in fluent Czech, try to convince you that it’s very sorry that it only speaks English, but Czech is definitely planned in future iterations!

There are some useful tools which I sadly couldn’t test (like the live translate during a call where each participant speaks their own language and the AI translates on the fly) because unlike lying Gemini, they truly don’t support Czech.

Edit: The English/Czech bit seems to be popular, so here are the screenshots. I don’t have all the parts of the conversation screenshotted, only these two:

Here are the Czech bits translated by Google Lens, the translation is not perfect, but it’s good enough to understand what’s going on:

> who will in fluent Czech try to convince that it’s very sorry that it only speaks English, but Czech is definitely planned in future iterations!

Best laugh of the day so far. Thank you :)

Added screenshots to the original comment!

AI has been in phones for ages. What’s new is the following:

- It has become fashionable to market things as AI

- New phones can run more AI locally now; in the past almost everything had to be sent to the cloud first

- LLMs enable much better answers if you ask a voice assistant a question, or if you want a summary of anything

Whether that warrants branding everything as AI, I’ll leave up to you.

LLMs enable potentially better answers and summaries. There’s also potential for massive failures, like it reporting that your mother attempted suicide when she was really just talking about how something exhausted her.

They can charge more for the AI tag

> What’s the actual difference? Is it just a fancy name for features we’ve been using

More or less, yes. AI is a marketing grift that tech companies have been trying to sell us for decades. It never ever works, but they continue to try and shove it down our throats year after year. It has the potential to be extremely valuable in a lot of ways, but in reality it falls short of what is needed in order to be valuable. We’ve seen this for decades with Siri and Google Assistant. I don’t know why. The only people buying it seem to be other corporations, right before it very predictably fucks them and then they discontinue it. There’s also tons of money to make by defrauding “investors” with farcical hype, taking their money, and never delivering anything practical.

OpenAI made some major strides with the likes of ChatGPT and DALL-E and really kicked this whole revolution off, but it’s already crashing and burning.

Marketing

As a word of caution: AI is a buzzword. Currently it gets slapped onto all kinds of things, including old things that are now marketed as AI. It might still be the exact same product as 5 years ago, or they might have come up with a new feature. Both happen.

I’d like Google Lens and Translate to work locally without sending anything to Google. And it’d be nice if it were to translate speech directly. I think that’d be a useful AI feature. Or translating Japanese websites. I don’t really care for face-unlock or if I can ask my phone to set a timer in natural language…

AI is just a marketing term. The underlying methods are the same.

The idea is there’s a chip that can run small models on device instead of using an external server over the internet.

Practically the difference is not much. You can get an LLM summary in the weather app etc, so exciting.

Yeah, I was pondering this earlier. In exchange for giving up quite a lot of the CPU die, you can have some NPU functionality instead; rather than having to offload everything to the cloud, you can preprocess some of it first, and then offload it.

Speech-to-text and text-to-speech plus general battery efficiency are reasonable use cases for a phone, certainly more so than a laptop and much more so than a desktop. But as you note, those models are going to be small, and RAM and backing storage on phones tend to be slow and limited.

Was also considering that an NPU gives no benefit at all unless code has been written for it; it’s a bastard to write code for something so concurrent, and giving up CPU die for an NPU instead of more cores and cache means that your phone will generally be slower unless it’s largely used for NPU tasks. Plus if you need to offload to the cloud for most useful tasks anyway, it’s just a marketing gimmick that makes your phone less good for most uses.

I think the Rabbit AI device or the Humane pin have the right idea, or at least the essence of the right idea, but they failed spectacularly because AI has not yet matured enough to be reliable and consistent. In this case that is a matter of data collection and time, since AI models fundamentally act like storage banks of thinky ability.

We do in fact want devices that do the boring things for us with minimal effort so that we can do the actually important things, whatever those may be. This will always be a rolling window based on what technology allows us to do. So far it has allowed us to automate global communication in various ways, but not fully, and I think the next phase is these models being able to do things involving complex thought that you can’t simply push a button or two for.

A device that does this fundamentally and reliably will be a game changer for getting all the simple boring tasks done.

Google’s Magic Eraser (using generative AI to replace things you circle in photos) is awesome. I never expected it to work so well, but it just does.