Hi folks. I am a CS major taking a 3rd year course in relational databases. The example DBs we study are pretty much all either a school or a company. On the bright side we get to do a project of our own design with C++ and Oracle DB. Has to be some kind of program that makes use of a reasonably sophisticated schema.

I was thinking I could make a DB program that does economic planning, but I don’t know what direction to go with it, really. Maybe the kernel of it, the usefulness could be, computing everything down to hours of human effort using the LTV. Labour time accounting. For example, we create a profile for what we want the living standard to be, like private and shared square feet per person, food choices, clothing choices, level of convenience of transport etc. Then the program could use a database containing information about the SNLT to produce different products and services to compute what professions would be needed and how much we all need to work, basically.

But like any idea this is starting out huge. So does anybody have ideas for how to make this small but extendable? Or different directions go with it, or totally different ideas that you have?

While I haven’t read it yet myself, I’ve heard that Paul Cockshot’s Towards a New Socialism goes into detail about algorithms that could be used for central planning.

I read it years ago, and I should definitely dig in again and review. Big part of why I want to do everything in labour time as much as possible. However I think he suggests the use of a neural network at one point which is a little over my head for now. I am thinking simpler like the pen and paper material balance planning the Gosplan cdes used to do…

I haven’t read the book yet, but Cockshott seems pretty active on twitter. You could probably hit him up with questions either through twitter or his university email, and I bet he’d be more than willing to give you some pointers for reading material.

Regarding the neural network, could the whole thing be represented by a linear regression? I’m shooting blind here, but depending on the complexity of your project you could use simpler but easier models that do what the neural net is supposed to do.

No neural networks are needed, but familiarity with linear algebra and matrix operations is necessary.

Outside of his towards a new socialism book, some of his earlier youtube videos on TNS get into some of the math.

Any programming language with matrix libraries would work.

Haven’t done any linear algebra or dug into matrices yet… do you think light study of the basics on KhanAcademy would be enough? I’ve done calc 1 and 2 and discrete math.

For something quick, chapter 2 of Goodfellow’s book is a good enough introduction.

For a longer but still intuitive material, 3blue1brown has a whole series of videos on Linear Algebra, with a lot of visualisation.

Linear algebra is much simpler than calculus in my opinion, you shouldn’t have much problems once you get your head around the basics. It’s also much more useful in programming than calculus. But it’s good to know both.

I’d recommend finding a specific course on matrix / linear algebra. It’s not an easy field but having some calculus should help.

Paul Cockshott also has a Youtube Channel where he talks about such things. I haven’t read the book either, but doing economic planning with Neural Networks sounds a little adventurous, because generally it is hard to reason about it’s decision processes. And having a plan that you can’t explain is risky at best.

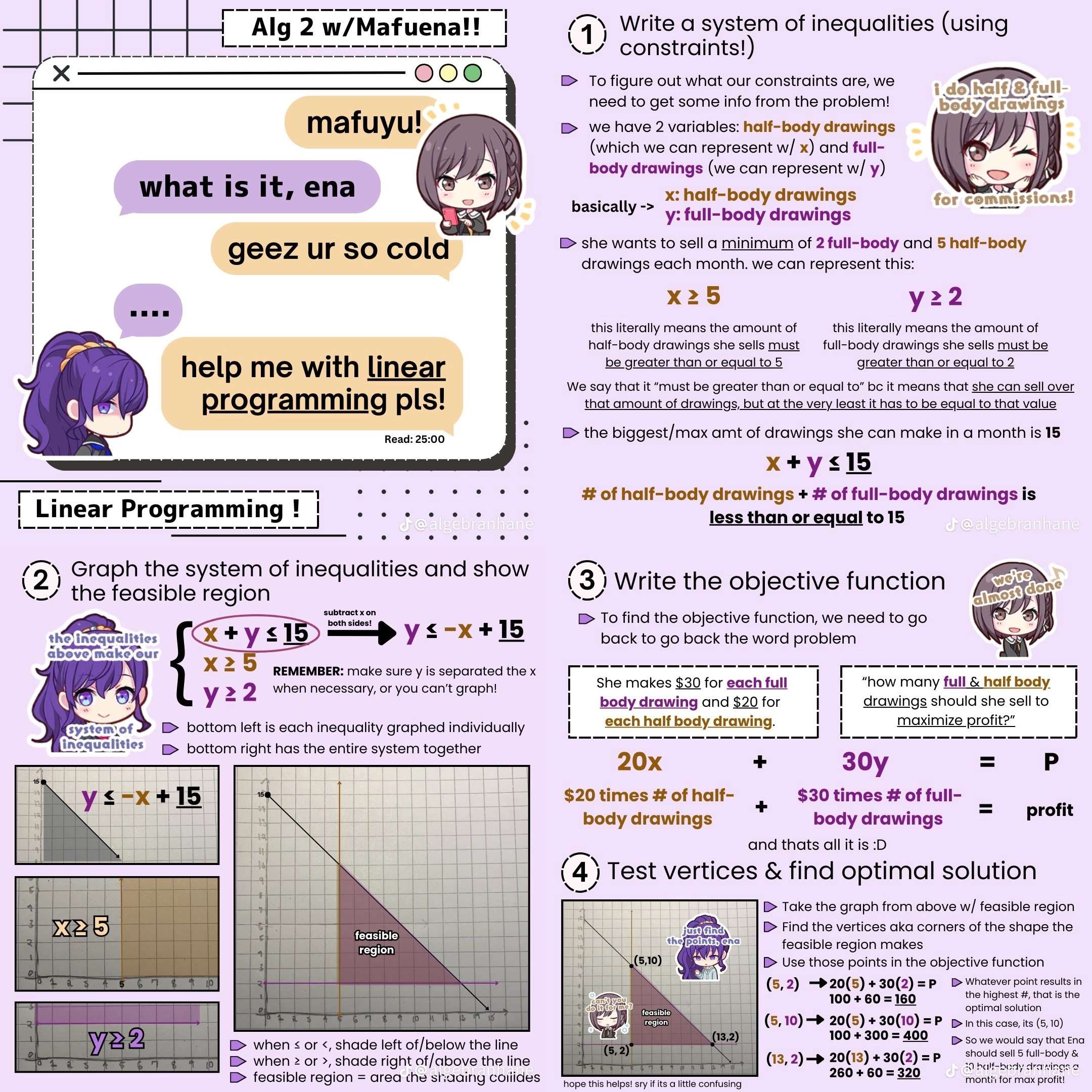

Cockshott also has done some work with Thomas Härdin about economic planning with Linear Programming [LP] and Mixed Integer Linear Programming [MILP]. This also slots in nicely with your original question. As @[email protected] has said, Databases are only part of the solution here. Economic Planning is an optimization problem and you need methods (like LP) to solve it. For more complex problems (like economic planning) you will need an understanding in linear algebra and matrices, here is a nice short (and cringe) introduction to LP that I found in some math discord a while back:

But note that they use a graphical approach to solve the problem, of course there are also numeric approaches to solve an LP problem.

Hi long time lurker, first time caller. Wow this is a great question.

First a database is just data at rest; you need to do something with the data therein. Others have mentioned Linear Algebra and “Towards a New Socialism” (the Harmony Algorithm) and I want to speak to those first.

Lets start with linear algebra: good, bad, and ugly. In a (basic) linear algebra approach you’d pose problems like so:

Ax + b = yWhere A is a matrix of input-output relations: a massive matrix with each row representing a recipe for something you’d want to produce and each column being some type of input. For example perhaps 1 unit of leather takes 1 hour of labor and consumes 1 cow, you’d put a 1 in the leather column and negative 1’s in both the labor and cows column. The vector b kind of represents things you’d get automatically (And we’ll assume it’s all zeros and toss it). And that leaves y as the goal vector (e.g. how many iphones you want to produce, how many cars, etc). Linear algebra, and the above formulation, allows you to work backwards and solve for x. This tells you how much metal you need, power, labor hours, cows, etc.

The good is it’s fast, reliable and well defined if you’re using it right. The bad is it’s quite limited: Take energy production for example – there are several ways to do this: wind, solar, gas, nuclear. Linear algebra isn’t going to handle this well. And what happens if some outputs are both end-products but also inputs to other products? Things get messy.

But the ugly is that the linear algebra approach is literally just a system of equations that represent a mapping between two different high dimensional spaces. Every column and every row should be measuring a totally unique thing. This is not an ideal situation for planning, here outputs of one industry are inputs to another.

This limitation can be creatively addressed by subdividing the problem and combining the answers. Perhaps splitting by region, or looking at each industrial sector in isolation. Which brings us to a good time to mention the Harmony algorithm which is one approach to subdividing the problem and recombining the answers.

But before we go on from here, it’s worth mentioning that there is no guarantee that solutions built from solutions to subproblems will be optimal. But they might be: it depends on the problem domain.

However there are more sophisticated models, such as the linear programming model. In this model you recast your planning problem into a description like so:

Maximize happiness Where happiness is 1.5 * number of iphones, + -.5 * level of pollution + ... AND acres of land used < 1000 AND number of labor hours used is < 50000 AND number of iphones = labor hours * .01 AND level of pollution = labor hours *.001 AND ...This model is much nicer: we have an objective to maximize (the happiness equation) and we can accommodate multiple production processes as constraints on the solution. We can also accommodate that making leather also produces beef, and that raising cows one way produces fertilizer but raising them another way produces pollution shit pools. So that solves the energy problem (we can also encode all of the different ways energy can be produced). We can also set hard limits on negative things (e.g. the max CO2 we want to emit).

But this model is much, much slower. I believe the largest solvers on the largest supercomputers can handle ~8 billion variables. But each production process you add could require dozens to hundreds of variables in the final model. So you’re back to divide and conquer approaches.

And we’ve barely begun! We haven’t looked at how you decide what to produce. We haven’t even looked at future planning (deciding what capital goods to invest in) nor geography (Where do you build or produce these things? Where do you run rail lines? Where is the labor that needs the jobs or has the skills?).

And what about your solution quality? Do you need the best possible plan or will any reasonably good plan do (e.g. top 10% of plans)? And what about the variety of possible solutions? Maybe one good plan is based on railway lines and collective agriculture while a different really good plan achieves the same with densification and high tech industries. How do you decide what’s best, but more importantly: Did your planning approach inform you that you had these very different options?

Nor have we looked at things like Cybersyn – how do you monitor your industries and identify weird-shit-happening-now in the production process (e.g. delays in shipping, sudden bursts of productivity, rising worker unhappiness, etc) and route around it?

I don’t think there is a planning “solution” but there are planning “tools”. All these approaches and more should be used by a dedicated planning department that can plan and correct for many timescales and contingencies. This department would also need to communicate the plans to appropriate stake-holders.

Anyway, I hope you enjoyed reading this, I certainly enjoyed writing it :-)

I’m starting to think I should make this my whole undergrad research project. I could develop a tiny part of it for this DB course. Thanks for your response, lots of helpful stuff here and will come back to it and others’ comments…

Databases are not really about what information is stored, but instead how it’s going to be used. Compared to writing software you have to kinda think backwards while designing them. So not “we create a profile to calculate everything that goes into the economic planning”, but “what simplest parts constitute the economy”.

To give you a direct example - you’d need a dataset for people

Person()who use and manipulate the economy, and another for stuff made of individualThing()that together form it. You can say that people have alabour_valueproperty to them. And things could have their production/maintenanceThing().labour_costexpressed in thatPerson().labour_value.From there it’s about actual programming and understanding the smaller parts of the problem as you solve it step by step. You can delve deeper into additional relationships between people and stuff-

Person().basic_needs_cost,Thing().lifetime,Thing().transportation_cost(Person().location), whatever.To put it very simply, programming is about having a refined final idea and breaking it into simpler parts. Data architecture, on the other hand, is taking the basic components and building the details on top of them.

this board is a godsend.