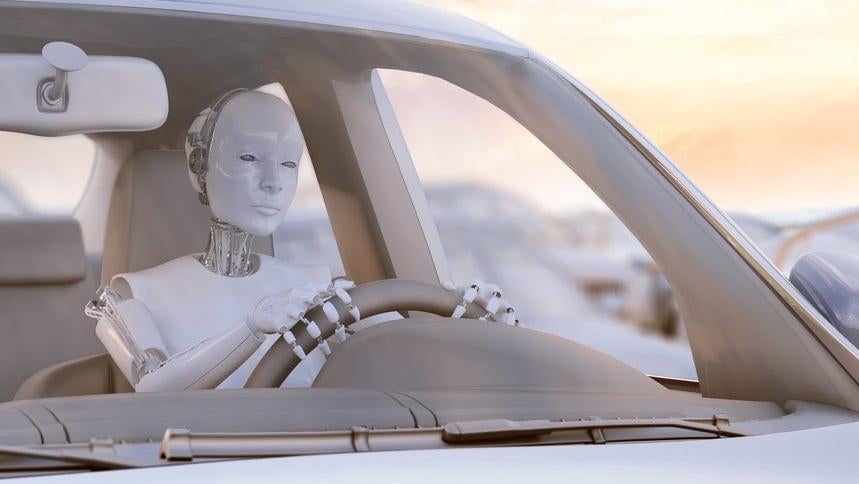

New research shows driverless car software is significantly more accurate with adults and light-skinned people than with children and dark-skinned people.

Built by Republican engineers.

I’ve seen this episode of Better Off Ted.

Veronica: The company’s position is that it’s actually the opposite of racist, because it’s not targeting black people. It’s just ignoring them. They insist the worst people can call it is “indifferent.”

Ted: Well, they know it has to be fixed, right? Please… at least say they know that.

Veronica: Of course they do, and they’re working on it. In the meantime they’d like everyone to celebrate the fact that it sees Hispanics, Asians, Pacific Islanders, and Jews.

That show ended too soon.

One reason why diversity in the workplace is necessary. Most of the country is not 40-year-old white men, so products need to be made with a more diverse crowd in mind.

Humans have the same problem, so it’s not surprising.

Yeah, this is a dumb article. There is something to be said about biased training data, but my guess is that it’s just harder to see people who are smaller and who have darker skin. It has nothing to do with training data and just has the same issues our eyes do. There is something to be said about Tesla using only regular cameras instead of Lidar, which I don’t think would have any difference based on skin tone, but smaller things will always be harder to see.

I can spot kids and dark skinned people pretty well

Seems this will be always the case. Small objects are harder to detect than larger objects. Higher contrast objects are easier to detect than lower contrast objects. Even if detection gets 1000x better, these cases will still be true. Do you introduce artificial error to make things fair?

Repeating the same comment from a crosspost.

All the more reason to take this seriously and not disregard it as an implementation detail.

When we, as a society, ask: Are autonomous vehicles safe enough yet?

That’s not the whole question.

…safe enough for whom?

Also what is the safety target? Humans are extremely unsafe. Are we looking for any improvement or are we looking for perfection?

This is why it’s as much a question of philosophy as it is of engineering.

Because there are things we care about besides quantitative measures.

If you replace 100 pedestrian deaths due to drunk drivers with 99 pedestrian deaths due to unexplainable self-driving malfunctions… Is that, unambiguously, an improvement?

I don’t know. In the aggregate, I guess I would have to say yes…?

But when I imagine being that person in that moment, trying to make sense of the sudden loss of a loved one and having no explanation other than watershed segmentation and k-means clustering… I start to feel some existential vertigo.

I worry that we’re sleepwalking into treating rationalist utilitarianism as the empirically correct moral model — because that’s the future that Silicon Valley is building, almost as if it’s inevitable.

And it makes me wonder, like… How many of us are actually thinking it through and deliberately agreeing with them? Or are we all just boiled frogs here?

Can everyone who feels the need to jog at twilight hours please wear bright colors? I get anxiety driving to my suburban friends.

They should. But also, good. You should absolutely feel anxiety operating a multi-ton piece of heavy machinery. Even if everybody was super diligent about making themselves visible, there would still be the off cases. Someone’s boss held them late and they missed the last bus so now they need to walk home in the dark when they dressed expecting to ride home in the day. Someone is down on their luck and needs to get to the nearest homeless resource and doesn’t have access to bright clothes. Drivers should never feel comfortable that obstacles will always be reflective and bright. Our transportation infrastructure should not be built to lull them into that false sense of comfort.

The ones I’m talking about aren’t homeless. These are well dressed yuppies in new hoodies jogging their suburban neighborhood. The cars are packed along the sidewalk at night because everyone is at home and they just jut out from between two of them with their hoodies up.

I’m not complaining that I have to drive cautiously. I’m complaining that I have an elevated heart rate.

It wasn’t meant to be an attack 🙂. Absolutely, those people should wear proper PPE (bright clothing) when running in the dark. But you have an elevated heart rate because your body is telling you that you are in a dangerous situation. And it’s right. Too many drivers (not an accusation at you) either ignore those signals (and mentally normalize driving recklessly) or blindly focus on removing the triggers for those signals at the expense of the safety of themselves and others (by buying larger vehicles or by voting for politicians that make our infrastructure even more hostile to pedestrians than it already is). At the end of the day, driving a car is dangerous, especially on residential streets (where pedestrian interactions are possible or even common). And that won’t change as long as it is a residential street. Being aware of the danger is a positive, if inconvenient, reminder to drive cautiously.

I wonder what the baseline is for the average driver spotting those same people? I expect it’s higher than the learning algo but by how much?

Ageist and racist cars?

They touch on this topic in Cars 2.

Italian fork lift pit crew FTW!

More like programmers who didn’t consider putting in more folks that don’t look like them in the training datasets. Unconscious bias strikes again.

I would say it’s because dark stuff on dark background is harder to detect than other way around. Roads are dark, shadows are dark, pavement is sometimes dark, houses are quite often dark, so it blends.

I’d rather call for more powerful algorithms than bigger data sets.

Misleading title?

How so?

Because proper driverless cars properly use LIDAR, which doesn’t give a shit about your skin color.

And can easily see an object the size of a child out to many metres in front, and doesn’t give a shit if it’s a child or not. It’s an object in the path or an object with a vector that is about to be in the path.

So change “Driverless Cars” to “Elon’s poor implementation of a Driverless Car”

Or better yet…

“Camera only AI-powered pedestrian detection systems” are Worse at Spotting Kids and Dark-Skinned People

Ok, but this isn’t just about Elon and Tesla?

The study examined eight AI-powered pedestrian detection systems used for autonomous driving research.

They tested multiple systems to see this problem.

The authors made an assumption that ad companies are using the same models as the open source project they tested this with. That’s complete nonsense and not near reality at all due to the enormous difference in data used (source, I’m working with this tech).

Unless you test with the exact same models as are used in the cars, this really proves nothing and really shows the authors’ own misunderstanding and bias (e.g. lidar vs camera discussions, or overall size of children vs adults).

First impression I got is driverless cars are worse at detecting kids and black people than drivers

I think it’s more a headline that can be misinterpreted than a misleading one. I read it the way it was detailed later: worse at spotting kids and dark-skinned folks than adults and light-skinned people. It can be ambiguous.

Eh, it’s not as bad as a typical news article title.

WEIRD bias strikes again.

deleted by creator

A stealth bomber gives less signal because of angles and materials and how they interact with radar, not because they are small or painted a dark color.

If a dark skinned person and a white skinned person are both wearing the same pants and long sleeved shirts, why would skin color be a factor beyond some kind of poorly implemented face recognition software like auto focus on cameras that also don’t work well for dark skinned folks? Especially when some of the object recognition is just looking for things in the way, not necessarily people.

No, it is not some simple explanation based on people’s eyes from the driver’s seat while driving in the dark. It is a result of the systems being trained based on white adults (probably men based on most medical and tech trials) instead of being trained on a comprehensive data set that represents the actual population.

deleted by creator

While some of these cars use radar to an extent, I believe this is mostly focusing on image recognition, which is from a camera. The two are distinctly different in how they recognize objects.

Image recognition relies on cameras, which rely on contrast, all of which is dependent on light levels. One thing to note about contrast is that it’s relative to its surroundings. I think this situation is more similar to your eyes recognizing things while driving in the dark than you think. I suggest you research how these things work before making claims.

So white people have higher contrast than dark skinned people?

Yes, in fact. This has been a huge challenge in photography algorithms for decades.

HP cameras couldn’t detect black people in 2009: http://edition.cnn.com/2009/TECH/12/22/hp.webcams/index.html

Google classified black people as gorillas in 2015: https://www.theverge.com/2018/1/12/16882408/google-racist-gorillas-photo-recognition-algorithm-ai

Zoom had issues with black faces and dark backgrounds in 2020: https://onezero.medium.com/zooms-virtual-background-feature-isn-t-built-for-black-faces-e0a97b591955

A quick primer in colour: recall that light colours reflect more light than dark colours. This means image recognition devices relying on cameras using standard spectrums (i.e. not infrared) receive less light into the sensor when pointed at someone with dark skin. The problem is constant, but less pronounced depending on the background. That is, a black person against a white background would be easier for an algorithm to identify as a person than said black person against a mixed or dark background.
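To put rough numbers on the "relative to the background" point, here is a minimal sketch using Michelson contrast, one common way to express contrast between two luminance values. The luminance figures are made-up placeholders for illustration, not measurements, and real detectors look at far more than a single contrast number.

```python
def michelson_contrast(target: float, background: float) -> float:
    """Michelson contrast between two non-negative luminance values."""
    total = target + background
    if total == 0:
        return 0.0  # both fully dark: no contrast to measure
    return abs(target - background) / total


# Hypothetical relative luminances (0 = black, 1 = white). Assumed values only.
SKIN = {"dark": 0.10, "light": 0.45}
BACKGROUNDS = {"white wall": 0.90, "asphalt at dusk": 0.08}


def contrast_table() -> dict:
    """Contrast of each assumed skin luminance against each assumed background."""
    return {
        (skin_name, bg_name): michelson_contrast(skin, bg)
        for skin_name, skin in SKIN.items()
        for bg_name, bg in BACKGROUNDS.items()
    }


if __name__ == "__main__":
    for (skin_name, bg_name), value in contrast_table().items():
        print(f"{skin_name} skin vs {bg_name}: {value:.2f}")
```

With these placeholder values the ordering flips depending on the background, which is the whole point: the same surface can be the high-contrast case against a bright wall and the low-contrast case against a dark road.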

All of those had issues for the sensors and recognition software because their data set to determine what a face is was mostly white people.

Just because something is harder doesn’t excuse them for not putting in the effort to get it right.

It’s not necessarily effort. Data can be expensive and difficult to obtain. If the data doesn’t exist then they have to gather it themselves which is even more expensive.

I agree that they should be making sure they can account for both cases as much as possible. But you have to remember that from the frame of reference of the model being trained and used in these instances, the only data they’re aware of is the data they were trained on and the data they are currently seeing. If most of the data samples in the entire world feature white people 60% of the time it’s going to be much better at recognizing white people. I don’t think anyone is purposely choosing to focus on white people; I think that those tend to be the data samples that are most easily obtained or simply the most prolific.
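For what it's worth, a skewed split like that 60% example does not have to be accepted as-is. One common mitigation (not something the study or any particular company is known to use) is to weight samples by inverse group frequency so each group contributes equally to the training loss. A minimal sketch, with hypothetical group labels and counts:

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def inverse_frequency_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Per-group weights so every group carries the same total weight."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    n_groups = len(counts)
    # weight = total / (n_groups * count): rarer groups get larger weights
    return {group: total / (n_groups * count) for group, count in counts.items()}


# Hypothetical data set: 60% of samples from one group, 40% from everyone else.
labels = ["light_skinned"] * 60 + ["other"] * 40
weights = inverse_frequency_weights(labels)

if __name__ == "__main__":
    for group, weight in weights.items():
        print(group, round(weight, 3))
```

Reweighting only rebalances what is already in the data set. It cannot add variety that was never collected, so it complements better data gathering rather than replacing it.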

I also think we need to take into account quality of data. As mentioned before, contrast plays a big role in image recognition. High contrast with background results in, on average, better data samples and a better chance of usable data. Training models on data that is inconclusive or ambiguous can lead to ineffective learning and bad predictive scores.

I don’t think anyone is saying this isn’t a problem but I also don’t believe that this is a willful failure. I think that good data can be difficult to get and that data featuring white people tends to have an easier time with image recognition.

Someone else mentioned infrared imaging, which is a good idea but also more money and adds an extra point of failure. There are pros and cons to every approach and strategy.

In some cases the data sets were only white, but engineers have been cognisant of this issue for decades so I don’t think that’s as common as you might believe. More frequently it’s just physics.

As for “putting in the effort,” companies are doing this, to their detriment. Ensuring that a small proportion of their customer base has a perfect experience is very expensive. In business the calculation between cost and profit is very important. If you’re arguing that companies should provide unprofitable products so that your sensibilities can be assuaged then I disagree. No company has a duty to provide a product to you.

It is a result of the systems being trained based on white adults

It’s both. The system is racist because of how it was trained and because its developers were not black, therefore “it worked for them” during development. And because black people are harder for cameras to see, especially in low light environments.

Even with clothes on, the dark skin, in a dark environment, “breaks” the “this is human” pattern that the ai expects to see, since the ai can see only the clothes. It is like camouflage. Can the ai “see” a pair of pants? Maybe, eventually but it still reduces the certainty, since the ai sees fewer “signs”.

Cameras should be using infrared to look for objects in the dark and not fucking hoping it looks slightly less dark than the surrounding pixels. It being “dark” is not an excuse. Cars drive at night and need to be engineered around that fact.

Edit: note this is about cameras. Ideally, you’d use radar which wouldn’t care but if you are just dual purposing cameras used for driving, this is the bare minimum.

deleted by creator

These systems are often trained on data obtained from driving the car around. I think the only real solution would be planning routes through more diverse neighborhoods. Although any company that is taking this seriously from a safety perspective has multiple radars and a top mounted LiDAR on their vehicles. Those sensors should be sufficient for detecting humans regardless of race even in a completely dark environment. Relying solely on camera data is just asking for problems for this and many other reasons.

Except that’s not the source of this problem. AI can be great at detecting patterns with little data, if it’s properly trained. But this article is clear that the reason for this failure is the lack of training data. This means that the AI never learned kids and dark-skinned people exist and it’s unreliable in detecting them.

It is easier to see dark object on bright background.

That’s only part of it though. This issue is almost as old as we have had similar image/facial recognition technologies. Data is where models get their conclusions from.

Yes but isn’t it easier to say RACISM

It’s not a discriminatory bias

You don’t know that.

Speaking as someone who inherited a computer vision codebase from Asian devs and quickly found that it didn’t work on white skin…

Implementation decisions matter, and those decisions will always be biased towards demonstrating successful output for the people who plan, bankroll, and labor on the project.

How much of the 20% or 7.5% difference in detection is due purely to inevitable drawbacks of size and skin tone?

Who knows.

What we do know is that we did measure a difference, and we do live in a culture where we’re more likely to hear a CEO say:

“It works!” …and then see an article months later that adds “…except for children and black people.”

rather than:

“It doesn’t work!” …and then see an article months later that adds “…except for adults and white people.”

And that fact means we should seriously consider whether our attention (and intention) is being fairly applied here.
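For anyone unsure what a "difference in detection" like the 20% or 7.5% figures means operationally, here is a minimal sketch of a per-group detection rate and the gap between two groups. The counts are invented purely for illustration and are not the study's data; the thread also doesn't say whether the reported figures are absolute or relative gaps, so this only shows the absolute version.

```python
from typing import Tuple

Counts = Tuple[int, int]  # (pedestrians detected, pedestrians present)


def detection_rate(detected: int, total: int) -> float:
    """Fraction of labelled pedestrians that the detector found."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= detected <= total:
        raise ValueError("detected must be between 0 and total")
    return detected / total


def rate_gap(group_a: Counts, group_b: Counts) -> float:
    """Absolute difference in detection rate (group_a minus group_b)."""
    return detection_rate(*group_a) - detection_rate(*group_b)


# Invented counts, for illustration only.
adults: Counts = (920, 1000)
children: Counts = (760, 1000)
gap = rate_gap(adults, children)

if __name__ == "__main__":
    print(f"absolute gap in detection rate: {gap:.1%}")
```

A gap computed this way says nothing about why it exists. Separating physics from training data would need controlled comparisons, such as the same scenes with matched lighting, distance, and clothing.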

Wow. that’s all kinds of incorrect

It’s not a discriminatory bias or even one that can really have anything done about it.

It is absolutely data training bias. Whether it is the data that ML was trained on or the data that programmers were trained on is irrelevant. This is a problem of the computer not recognizing that a human is a human

It’s purely physics.

It is not. See below:

Is it harder to track smaller objects or larger ones?

No, not if the scale of your hardware is correct. A 3’ tall human may be smaller than a 6’ one, but it is larger than a 10” traffic light lens or a 30” stop sign. The systems do not have quite as much trouble recognizing those smaller objects. This is a problem of the computer not recognizing that the human is a human.
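To make the size comparison concrete, here is a rough sketch using the ideal pinhole camera relation (height in pixels = focal length in pixels × object height ÷ distance). The focal length and distance are assumed values, not taken from any real vehicle or from the study.

```python
FEET_TO_M = 0.3048
INCHES_TO_M = 0.0254


def projected_height_px(height_m: float, distance_m: float, focal_length_px: float) -> float:
    """Image height in pixels of an object under an ideal pinhole camera model."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * height_m / distance_m


# Assumed camera and scene parameters (hypothetical).
FOCAL_LENGTH_PX = 1000.0
DISTANCE_M = 30.0

# Object sizes from the comment above, converted to metres.
OBJECTS_M = {
    "3 ft child": 3 * FEET_TO_M,
    "6 ft adult": 6 * FEET_TO_M,
    "10 in traffic light lens": 10 * INCHES_TO_M,
    "30 in stop sign": 30 * INCHES_TO_M,
}


def heights_at(distance_m: float = DISTANCE_M) -> dict:
    """Projected pixel height of each object at the given distance."""
    return {
        name: projected_height_px(height, distance_m, FOCAL_LENGTH_PX)
        for name, height in OBJECTS_M.items()
    }


if __name__ == "__main__":
    for name, pixels in heights_at().items():
        print(f"{name}: {pixels:.1f} px tall at {DISTANCE_M:.0f} m")
```

Under these assumptions the child covers more pixels than either the sign or the light lens at the same distance. Pixel count is only one factor, though: signs and lights have fixed shapes and colours, while people vary in pose and clothing, so this doesn't settle the argument either way.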

Is it harder for an optical system to track something darker. In any natural scene.

Also no. If that were the case, then we would have problems with collision bias against darker vehicles, or not being able to recognize the black asphalt of the road. Optical systems do not rely on the absolute signal strength of an object. They rely on contrast. A darker skin tone would only have low contrast against a background with a similar shade, and that doesn’t even account for clothing which usually covers most of a person’s body. Again, this is a problem of the computer not recognizing that the human is a human.

smaller and darker individuals have less signal. Less signal means lower probability of detection,

No, they have different signals. That signal needs to be compared to the background to determine whether it exists and where it is, and then compared to the dataset to determine what it is. This is still a problem of the computer not recognizing that the human is a human.

It’s the same reason a stealth bomber is harder to track than a passenger plane. Less signal.

Close, but not quite.

- In this case the “less signal” only works because it is compared to a low signal background, creating a low contrast image. It is more like camouflage than invisibility. Radar uses a single source of “illumination” against a mostly empty backdrop so the background is “dark”, like looking up at the night sky with a flashlight.

- The less signal is not because the plane is optically dark. It has a special coating that absorbs some of the radar illumination and a special shape that scatters some of the radar illumination, coming from that single source, away from the single point sensor. Humans of any skin tone are not specially designed to absorb and scatter optical light from any particular type of light source away from any particular sensor. Even at night, a vehicle should have a minimum of 2 headlights as sources of optical illumination (as well as streetlights, other vehicles, buildings, signs and other light pollution) and multiple sensors. Furthermore, the system should be designed to demand manual control as it approaches insufficient illumination to operate.

This is a problem of the computer not recognizing that the human is a human.

You sound like an imaging specialist with no experience

I’m sure that will be of great comfort to any dark-skinned person or child that gets hit.

If those are known, expected issues? Then they had better program around it before putting driverless cars out on the road where dark-skinned people and children are not theoreticals but realities.

In order to make the software detect the same you have to make it detect white adults less.

Comparing the performance between races says nothing about how safe a driverless car is. I am sure that the chances of a human hitting a dark skinned person dwarfs the chances of a driverless car. Trying to convince people driverless cars are racist only delays development, adoption and lawmaking which means more flawed meatbags behind the wheel which means more car accident deaths.

What he’s saying is these aren’t issues, they’re like saying a masculine voice can be heard from further away. Deeper voices just carry better

Part of it is bias/training data - we can fix that. But then you’re still left with the fact children are smaller and dark skinned people are darker - if you use the human visible range of light (which most cameras do), they’re always going to be harder to detect than larger more reflective people.

Our eyes and brains have an insane ability to focus and deal with varying levels of light, literally each cell adapts individually to each wavelength. We don’t have much issue picking out anyone until it becomes extremely dark or extremely far away - it’s not because the problem is easy, it’s because humans are incredible at it

Thank you.

You seem to be one of the people who understand this better.

And even humans are not incredible at it. It’s just inherently harder to identify the areas where there is less signal. I’d love to see a study, but see my edit and actually quantifying the equality we’re after.

Reality/physics/science/PDEs (whatever) work on “differences”. The less difference, the harder.

Okay but being better able to detect X doesn’t mean you are unable to detect Y well enough. I’d say the physical attributes of children and dark-skinned people would make it more difficult for people to see objects as well, under many conditions. But that doesn’t require a news article.

But that doesn’t require a news article.

Most things I read on the Fediverse don’t.

Elon, probably: “Go be young and black somewhere else”

Those types of people are the ones who Elon Musk calls “acceptable losses.”

What about hairy people? Hope cars won’t think I’m a racoon and goes grill time!

Lmao

Unfortunate but not shocking to be honest.

Only recently did smartphone cameras get better at detecting darker skinned faces in software, and that was something they were probably working towards for a decent while. Not all that surprising that other camera tech would have to play catch up in that regard as well.

deleted by creator

This is Lemmy, so immediately 1/2 the people here are ready to be race-baited and want to cry RaCiSm1!!1!!

This place is just as bad as Reddit. Sometimes it’s like being on r/Politics. I’m not sure why they left if they just brought the hyper political toxicity with them.

I’d go so far as to claim that sometimes it is worse than Reddit. Sometimes.

Not racism, but definitely (unconscious) bias of the researchers

Apparently nighttime and black people are both dark in color. Nobody thought to tell the cameras or the software this information.