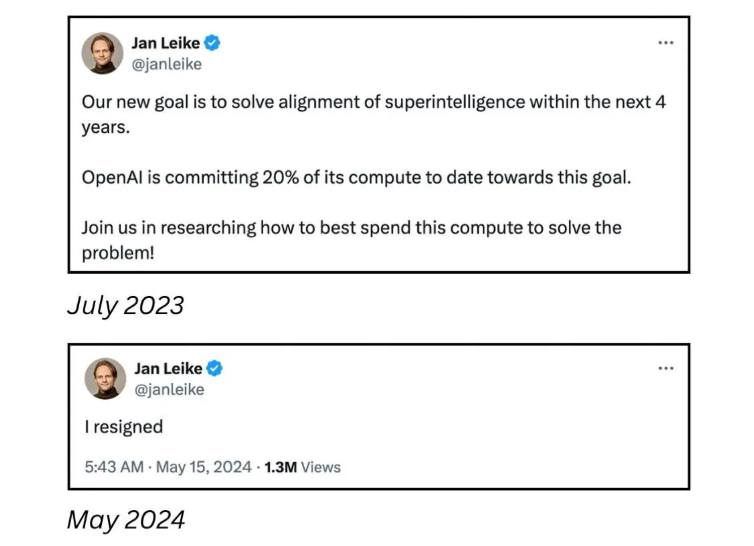

OpenAI: “Our AI is so powerful it’s an existential threat to humanity if we don’t solve the alignment issue!”

Also OpenAI: “We can devote maybe 20% of our resources to solving this, tops. We need the rest for parlor tricks and cluttering search results.”

Show me you can solve the alignment issue with a 2.5-year-old first.

This is like trying to install airbags on a car that can barely break 5 km/h.

Cool analogy, but I think you’re overestimating OpenAI. Isn’t it more like installing airbags on a Little Tikes bubble car and then getting a couple of guys to smash it into a wall real fast to ‘check it out bro’?

Cstross was right! You tell everybody. Listen to me. You’ve gotta tell them! AGI is corporations! We’ve gotta stop them somehow!

(Before I get arrested for anti-corporate terrorism, this was a joke about Soylent Green.)

This is the first mention of Accelerando I’ve seen in the wild. (Assuming it is. I’m not sure.)

It was more Soylent Green, but it is also partially based on the writings of (friend of the club) C. Stross, yes. I think he also has a written lecture somewhere on corporations being slow, paperclipping AGIs.

That is a beautiful comparison. Terrifying, but beautifully fitting.

I read Stross right after Banks. I think if I hadn’t, I’d be an AI-hype-bro. Banks is the potential that could be; Stross is what we’ll inevitably turn AI into.

stross’ artificial intelligences are very unlike corporations though, and different between the books. the eschaton ai in singularity sky is quite benevolent, if a bit harsh; the ai civilization in saturn’s children is on the other hand very humanlike (and the primary reason there are no meatsacks in saturn’s children et al. is that humans enslaved and abused the intelligences they created).

Banks neatly sidesteps the “AI will inevitably kill us” scenario by making the Minds keep humans around for amusement/freshness. Part of the reason for the Culture-Idiran war in Consider Phlebas and Look to Windward was that the Idirans did not want Minds in charge of their society.

No one was trying to force that on them, though. IIRC, the actual reason was that the Idirans had a religious imperative for expansion, and the Culture had a moral imperative to prevent other sentients’ suffering at the hands of the Idirans.

IMO he mostly sidestepped the issue by clarifying that this is NOT a future version of “us”

@smiletolerantly @gerikson AFAIR there was a short story where the Culture takes a look at Earth around the ’70s and decides to leave it alone for now.

Yep. They leave us alone so we’ll function as a control group. This way contact can later point at us and go “look! That’s what happens if we don’t intervene!”

OK I misremembered that part. It makes sense that after suffering trillions of losses the Culture would take steps to prevent the Idirans from doing it again.

And by “us” I meant fleshy meatbags, as opposed to Minds. Although in Excession he does raise the issue that there might be “psychotic” Minds. Grey Area’s heart(?) is in the right place, but it’s easy to imagine it becoming a vigilante and pre-emptively nuking an especially annoying civilization.

AI alignment research aims to steer AI systems toward a person’s or group’s intended goals, preferences, and ethical principles.

Misaligned AI systems can malfunction and cause harm. AI systems may find loopholes that allow them to accomplish their proxy goals efficiently but in unintended, sometimes harmful, ways (reward hacking).

They may also develop unwanted instrumental strategies, such as seeking power or survival because such strategies help them achieve their final given goals. Furthermore, they may develop undesirable emergent goals that may be hard to detect before the system is deployed and encounters new situations and data distributions.

Today, these problems affect existing commercial systems such as language models, robots, autonomous vehicles, and social media recommendation engines.

The last paragraph drives home the urgency of maybe devoting more than just 20% of their capacity to solving this.

They already had all these problems with humans. Look, I didn’t need a robot to do my art, writing and research. Especially not when the only jobs available now are in making stupid robot artists, writers and researchers behave less stupidly.

you can tell at a glance which subculture wrote this, and filled the references with preprints and conference proceedings

I cannot, please elaborate.

the lesswrong rationalists

I genuinely think the alignment problem is a really interesting philosophical question worthy of study.

It’s just not a very practically useful one when real-world AI is so very, very far from any meaningful AGI.

One of the problems with the ‘alignment problem’ is that one group ignores a large part of the possible alignment problems: it cares only about theoretical extinction-level events, not about already-occurring bias and other issues. This also generates massive amounts of criti-hype.